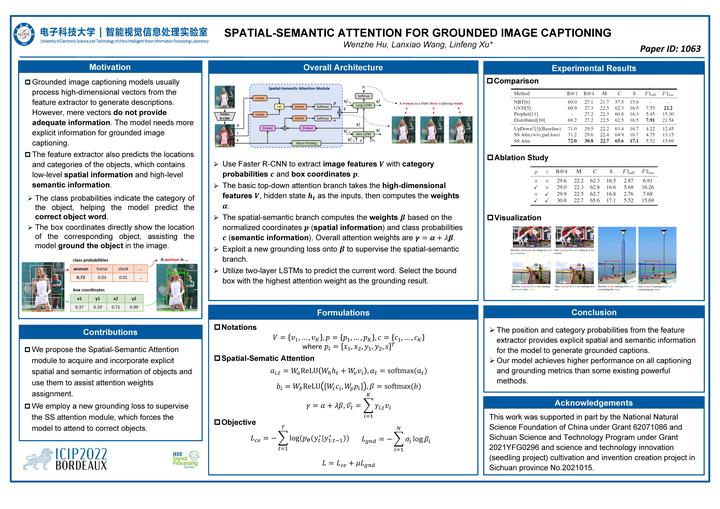

Spatial-Semantic Attention For Grounded Image Captioning

Image credit: Unsplash

Image credit: Unsplash

Abstract

Grounded image captioning models usually process highdimensional vectors from the feature extractor to generate descriptions. However, mere vectors do not provide adequate information. The model needs more explicit information for grounded image captioning. Besides high dimensional vectors, the feature extractor also predicts the locations and categories of the objects, which contains low-level spatial information and high-level semantic information. To this end, we propose a new attention module called Spatial-Semantic (SS) Attention, which utilizes the predictions from the backbone network to help the model attend to the correct objects. Specifically, the SS attention module collects the position of proposals and the class probabilities from the feature extractor as spatial and semantic information to assist attention weighting. In addition, we propose a grounding loss to supervise the SS attention. Our method achieves high performance on captioning and grounding metrics and outperforms some powerful previous models on the Flickr30k Entities dataset.