A Multi-granularity Feature Fusion Model for Pedestrian Attribute Recognition

Image credit: Unsplash


Abstract

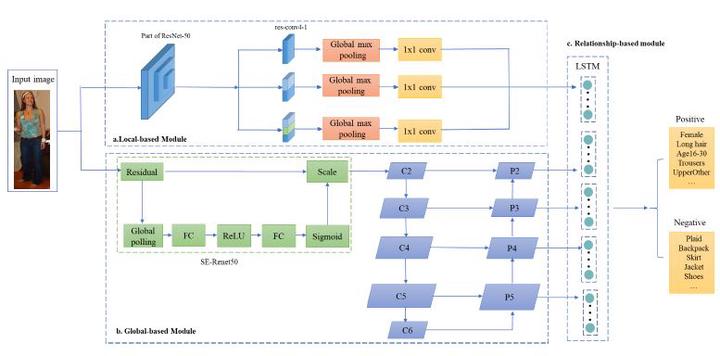

Extracting features at various scales is a major challenge for pedestrian attribute recognition (PAR). Current mainstream methods jointly use global features and fine-grained part features. However, most part-based algorithms rely on a part localization module, such as Poselets, which adds substantial computational cost to the network, and their performance depends heavily on these external modules. To address these problems, we propose a simple and effective PAR method that jointly uses global and part features without external modules. First, part features are extracted by a multi-granularity module, which lets the network attend fully to attributes that are small and carry weak semantic information in pedestrian images. Meanwhile, a global-based module combines the part features with multi-scale global features, effectively fusing edge information to obtain feature representations at multiple granularities. In addition, we adopt an LSTM to model the spatial and semantic correlations between attributes. We compare our method with other state-of-the-art methods on three public datasets, PA100k, RAP, and RAPv2, and the experimental results demonstrate its effectiveness.
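The abstract does not say how the multi-granularity module partitions the backbone feature map. Purely as an illustration, the following sketch assumes a scheme like the horizontal-stripe partitioning used in multiple-granularity re-identification networks: for each granularity `g`, the map is split into `g` horizontal stripes, each stripe is average-pooled, and all descriptors are concatenated. The function name `multi_granularity_features` and the default granularities `(1, 2, 3)` are hypothetical and not taken from the paper; the global-based fusion and LSTM stages are not shown.

```python
import numpy as np


def multi_granularity_features(feature_map: np.ndarray, granularities=(1, 2, 3)) -> np.ndarray:
    """Pool a (C, H, W) feature map into stripe-level descriptors at several granularities.

    Hypothetical sketch: granularity g splits the map into g horizontal stripes
    along the height axis, and each stripe is global-average-pooled to a C-dim
    vector. Granularity 1 therefore yields the ordinary global descriptor.
    """
    if feature_map.ndim != 3:
        raise ValueError("expected a (C, H, W) feature map")
    _, height, _ = feature_map.shape
    descriptors = []
    for g in granularities:
        if g > height:
            raise ValueError(f"granularity {g} exceeds feature-map height {height}")
        # np.array_split tolerates heights that are not divisible by g
        for stripe in np.array_split(feature_map, g, axis=1):
            # Average over the spatial axes (H, W) to get one C-dim vector per stripe
            descriptors.append(stripe.mean(axis=(1, 2)))
    # Result length is sum(granularities) * C
    return np.concatenate(descriptors)
```

For example, a 256-channel map of size 24 x 8 with the default granularities produces 1 + 2 + 3 = 6 descriptors, i.e. a 1536-dimensional vector. Finer stripes keep local evidence for small attributes (such as shoes or hats) from being averaged away by the whole-body global descriptor, which is the motivation the abstract gives for multiple granularities.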